A lesson from the FISK Arrival

It’s July, an apt time to reflect on a word that evokes a range of reactions from aviators. Some grin like Disney characters. Some smile at sepia-tinted memories. Some roll their eyes. All react on hearing “Oshkosh.”

More formally known as “EAA AirVenture,” the week(ish)-long convention, hosted by the Experimental Aircraft Association (and universally referred to by the name of its Wisconsin host city), has a scale that is difficult to describe to the uninitiated. In a given year, roughly one tenth of the world’s airworthy fleet will pass through Oshkosh, its relief airports at Appleton and Fond du Lac, or the associated seaplane moorings on nearby Lake Winnebago. Seven hundred thousand people pass through the grounds at some point, making it one of the largest multi-day gatherings in North America.

Virtually every aircraft manufacturer, parts maker, and purveyor of aviation services, lofty charities¹, bad investment ideas, and questionable inventions will put up a display booth somewhere. The second-hand flea market (ahem – “fly market”) occupies enough land to play a decent polo match. Over 1,000 seminars and courses are offered, covering everything from welding titanium to obtaining diplomatic clearance to overfly Burundi.

Aerobatic airshows occur daily, some stretching into the night with wing-attached flying pyrotechnic displays – plural. All military services are represented, and typically multiple military demonstration teams perform throughout the week: a Thunderbirds AND Blue Angels double-header is not uncommon. Record breakers and industry leaders deliver keynote addresses to shoulder-to-shoulder standing audiences. Friends are made and bratwursts consumed by the metric ton.

Among the aviation-minded, the above explains the Duchenne smile reactions. So why the rolled eyes, grimaces, and oaths of “never again”?

Those 10,000+ participating aircraft, from “parachute-with-a-fan” to jumbo jet?

They all arrive at roughly the same time.

The International Air Transport Association (IATA) monitors the busiest air traffic control towers around the world, with Atlanta’s Hartsfield-Jackson, Chicago’s O’Hare, and London’s Heathrow usually at the top of the list. IATA also notes that for one week in late July, these large, heavily staffed towers serving global commerce hubs fall behind a small city in eastern Wisconsin.

Air traffic control into Oshkosh is chaotic, but it’s highly organized chaos. Working Oshkosh is a competitive honor among FAA controllers, and hundreds of the FAA’s finest (clad in bright pink shirts for easy identification) conduct a symphony in the sky. Communications are one-way: controllers talk and aviators listen; anything else would congest the 15+ radio frequencies to the point of uselessness. Aircraft are funneled onto one of Oshkosh’s five paved runways, two unpaved runways, many helipads, and seaplane lanes; in some years, an airship mooring makes the scene. Landings happen concurrently. Colored dots, painted every 1,000 feet along the runways, allow up to three airplanes to touch down simultaneously in a prom conga line that moves in three dimensions at 90+ miles per hour.

Flying this arrival for the first time is the worst kind of sensory overload. You strain to see scores of airplanes in the afternoon glare, knowing there are many more you can’t see, while holding an exact altitude and airspeed and listening for radio calls that sound like an auctioneer’s midnight assignation with a New York City dock inspector: “Red’n-white-haw-wing-proceed-rail’tracks-t’-2-7-right-turn-only-ROCK-YOUR-WINGS-IF-YOU-INTEND-TO-COMPLY!”

But the striking thing is that this system WORKS, and that is where the risk management observations begin.

Before delving into specifics, a quick review of the Crew Resource Management SHELL model will help us understand the two critical elements that let a task as inherently hazardous as 10,000 of the world’s aircraft descending on a small Wisconsin town proceed with a remarkable safety record.

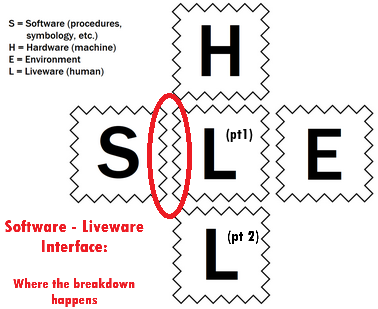

The SHELL model describes the five principal contributors to crew risk management and, equally important, their interfaces with each other:

- Software – the established processes and procedures a crew uses to operate an aircraft. Examples include checklists, laws, FAA procedures and regulations, manufacturers’ manuals, etc. Not to be confused with electronics or the digital instructions upon which electronics run.

- Hardware – the airplane, and anything non-living within it, such as avionics. This is where the electronics and any digital spirits resident within are considered.

- Environment – the geographical, meteorological, spectral, or other non-human elements outside the airplane. Includes things like mountains, hailstones, global pandemics, solar flares, airline hiring freezes, and errant birthday balloons.

- Liveware (pt 1) – the crew. Living, breathing humans with the strengths and weaknesses inherent thereto. Can be one person, as it is for most aircraft arriving at Oshkosh. Ostensibly, operates Hardware in an Environment per the processes of Software.

- Liveware (pt 2) – those humans who are not the crew, such as controllers, passengers, dispatchers, etc. Cooperates with the crew and possesses a similar set of inherent strengths and weaknesses. Other people’s aircraft (manned and otherwise) are usually considered here.

As drawn above, Liveware (pt 1), a.k.a. the crew², lies at the center of a four-leaf clover, bordering each of the elements that surround it. The key to the SHELL model is that we can act to control risk inside each block. Better checklists can be written, more modern aircraft purchased and maintained, weather and terrain avoided, crews trained (and recurrently retrained), and the same for non-crew. Interestingly, most manifested hazards (read: catastrophic crashes) occur at the zig-zag intersections between SHELL elements. A pilot flies into poor conditions AND isn’t instrument qualified. A UH-60 Black Hawk is equipped with an ADS-B Out transponder that would make it visible on the navigation displays of nearby aircraft, BUT its crew doesn’t turn it on. By focusing hazard reduction on the SHELL elements we can control, and by recognizing the weaknesses at their interfaces, we can reduce manifested hazards to an astonishingly low level.

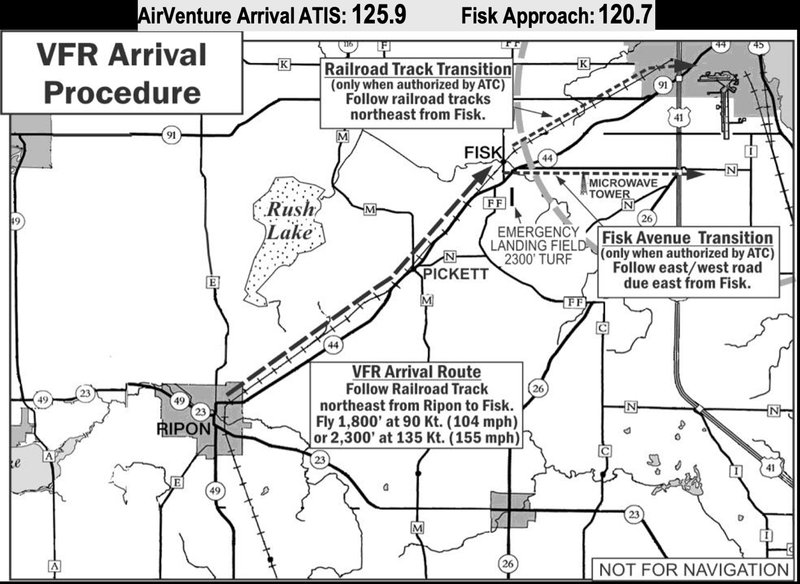

Which brings us back to the first element of why Oshkosh works. Every day, the FAA updates Software (in the SHELL sense) by publishing special instructions to aircrews in quick missives known as NOTAMs, or Notices to Airmen³. These are typically one- or two-paragraph notices covering considerations such as a closed runway, poor braking, or an increase in nearby geese. Once a year, one of these NOTAMs establishes special navigation procedures for arrivals into, and equally dense (and often concurrent) departures from, Oshkosh.

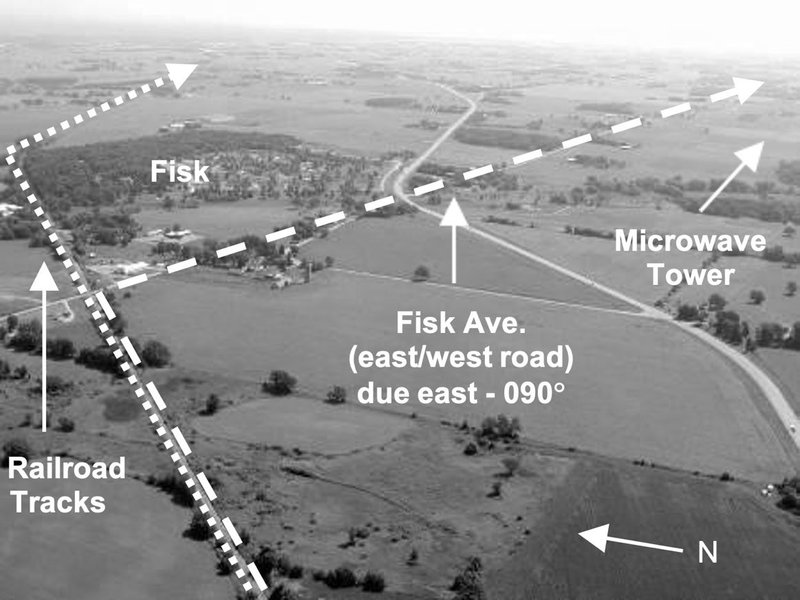

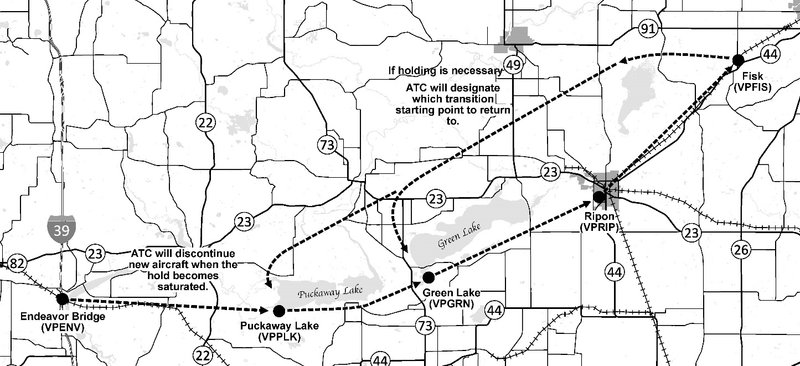

This document, weighing in at around 40 pages, is an effective Software product in the SHELL sense of established process. It creates a system for systematically funneling aircraft (of the fixed, rotating, and no-wing varieties) from every point of the compass to a point roughly over the small town of Fisk, Wisconsin, from which the procedure gets its name (per the NOTAM, look for “steam from the grain drying facility”). From this point, where aircraft have been organized at half-mile intervals into one of several conga lines, each traveling at a prescribed speed and altitude, controllers can efficiently route arriving streams of traffic to any of several runways, move them to a different conga line, send them back to the start for another lap to buy time or relieve congestion, or divert them to a different airport altogether. For the arrival to work at all, let alone as well as it does, pilots must carefully study this document, fly with solid airmanship, and adhere to the prescribed procedure.

This last observation highlights the second, and more interesting, reason why the system works. In the zig-zag intersection between Software and Liveware (pt 1) lies a cultural norm that sees the Liveware aircrews wholly and actively use the Software procedure.

This is far from a universal human tendency. Setting aside broader national cultural programming (e.g., Americans versus Koreans) and looking only at occupational and recreational cultures (e.g., cyclists versus pilots), it’s easy to find arenas where the Software-to-Liveware interface is weak or non-existent. For instance, like aviation and boating, hiking has a defined set of right-of-way rules. The short version is that cyclists (regardless of the value of the bike or the tightness of the spandex) yield to hikers, and everyone yields to equestrians (irrespective of how shabby the horse or slovenly the rider). The uphill-bound hiker or cyclist has the right of way, and the downhill-bound hiker steps to the upsloping side of the trail, where it is safe to do so. When overtaking a fellow hiker, announce your intentions verbally and pass on the down-sloping side of the trail. Finally, when waiting for an equestrian to pass, stand on the down-sloping side of the trail if possible.

That’s it. Four rules.

The Oshkosh NOTAM takes about an hour to read (and several readings to understand). The rules of hiking right-of-way can be written on a postcard and explained in about a minute, even over post-hike drinks. But there’s one critical difference. Almost everyone arriving at Oshkosh follows the NOTAM. Almost no one out hiking observes the right-of-way rules. I’ve personally been yelled at on the trail by kamikaze downhill bikers, been lectured by otherwise experienced hikers for not mapping my feet onto a keep-to-the-right, pass-on-the-left-like-a-highway model of deconfliction, and apparently harshed the mellow of my fellow (albeit herbally altered) outdoorsmen by announcing “Passing right.”

At first blush, this seems like risk management in action: pilots comply because the stakes are higher. The errors of air navigation can be profoundly destructive and spectacularly fatal. Conversely, Hippocrates described walking as “man’s best medicine.” The FISK arrival may be the world’s most extreme example of deliberately bringing a significant number of 100+ mph objects into close proximity, while hiking trails are favored by those who wish to maximize their separation from other humans, slow or fast-moving. On further reflection, though, the consequences converge. Getting hit by a 40 mph cyclist can also be fatal to the hiker, the cyclist, or both. The quantity of post-crash flames aside, pilots, passengers, hikers, and cyclists are equally dead in this comparison. The apparent magnitude of risk (the thing that erroneously makes a fall from 30 feet look safer than a fall from 30,000) may differ, but the equally-dead observation levels the actual magnitude of risk.

We may also be tempted to attribute this to behaviors normed in other pursuits. Almost all hikers are driving adults (or in the company of driving adults), which makes it natural to map road rules, built for a uniform, engineered surface populated by vehicles engineered to operate on it, onto the irregular terrain of trails. Code switching, consciously and safely transitioning from one set of Software to another, breaks this habit. To stretch the right-of-way examples further, seaplanes in flight operate under a different set of right-of-way rules than on the water, where boat rules apply. Seaplane crews, by and large, successfully code-switch from boat rules to airplane rules and back again across a one-foot change in altitude.

So what is the lesson from the FISK arrival? What do we take from an official US Government publication that trades the normally technical approach guidance of compass vectors and radio beacons for directions such as “All aircraft must visually navigate directly over the railroad tracks from Ripon to Fisk,” yet successfully enables over 10,000 aircraft to arrive at a small city in a matter of hours? What secret sauce do a significant fraction of aviators arriving at Oshkosh have that’s missing from our mountain bikers?

At the intersection of Software and Liveware, the aviators have a cultural norm that emphasizes reading, understanding, and, most importantly, following their Software. As their Software changes, the aviators actively modify their behavior to conform to the new processes. At the same intersection, hikers and mountain bikers take a blasé view of theirs, assuming they’re aware of these procedures to begin with. The aviators read and understand their 40-page NOTAM; the cyclists, by and large, are oblivious to a set of rules that could be scribbled on a handlebar.

That’s the lesson from the FISK arrival. Culture eats policy for breakfast.

Mediocre Software, reliably executed thanks to a strong Liveware-Software interface, is dramatically more effective than excellent Software that the involved humans ignore. From a leadership perspective, creating this cultural norm is hard – putting on my Air Force officer hat, I get that. But many leaders’ immediate reaction is to control risk through more, better, or different policy Software. The same effort applied to re-norming, so that people actually follow the procedures, tends to pay off much better in the long run.

Let me know your thoughts below, and click here to subscribe!

-Jerome

Would you like to embed aviation risk management culture in your organization? Crate of Thunder Aerospace Consulting can help!

1. Pun intended.

2. “Crew” in this context includes single-pilot operations, operators of unmanned systems, and often non-pilot personnel such as flight engineers.

3. Briefly renamed to the gender-neutral “Notice to Air Missions,” the official FAA term has since returned to “Notice to Airmen,” though the two can be used interchangeably.
